The automobile manufacturing industry, according to Ben Kelley in his recent commentary for the Journal of Public Health Policy, has a history of ignoring safety recommendations meant to protect drivers, sometimes from their own folly. In 1965, for example, Consumer Reports attempted to shift blame from manufacturer to driver, sparing manufacturers from having to add safety modifications to cars. Seat belts, mandated beginning in the 1960s to “increase crashworthiness of motor vehicles,” and airbags, mandated decades later, were both initially met with intense resistance from auto companies. Today, the industry faces similar challenges in design and safety once again.

About 1.25 million people die in automobile accidents worldwide each year, and “driver behavior” has been identified as a major cause. Alcoholism, aging, drowsiness, “macho attitude,” speeding, failure to wear seat belts, and suicidal behavior are but a few of the risk factors for death associated with road travel.

Automated, or self-driving, vehicles (AVs) have sped onto the scene, propelled by the tech industry. Though AVs were originally intended to prevent avoidable deaths caused by human error, Kelley argues that a lack of caution in developing them actually puts consumers in danger.

A Brookings Institution report by Darrell M. West suggests that autonomous vehicles have the potential to “remove human error and bad judgment from vehicular operations altogether,” thereby reducing traffic deaths. Barriers to these benefits include infrastructure, weather, hacking threats, and public acceptance.

Most cars on the road today are driven largely by humans, placing them at or below Level 2 of the Society of Automotive Engineers’ (SAE International’s) “hierarchy of automation.” Somewhere between Level 2 and Level 3 are features usually considered luxury add-ons, such as automatic emergency braking, forward collision warnings, and backup cameras. Level 3 cars are semi-autonomous: they can drive themselves under some conditions, but a human driver must be ready to take over when needed. Level 4 cars are fully self-driving. U.S. manufacturers want to skip Level 3 because, according to them, “it’s safer and less costly than pursuing a midway point of limited self-driving.”

This may be true. But many considerations must be addressed before AV technology can be shown to be safer and more cost-effective than Level 2 cars.

One major question that will need serious consideration is who is liable should a self-driving car crash. The National Transportation Safety Board (NTSB), an independent, nonregulatory federal agency that investigates significant accidents and issues safety recommendations based on its findings, has warned against an “over-reliance on automation” and a “lack of safeguards,” both of which it held responsible for a recent fatal Tesla crash in Florida. What would an automated car choose when faced with the decision between “a potentially fatal collision with an out-of-control truck or heading up on the sidewalk and hitting pedestrians?” asked former NTSB Chairman Christopher Hart.

A second consideration is how programmers can code ethics into a vehicle. Kelley quotes an unnamed ethics educator who said, “This is different than beta-testing, say, office software. If your office app crashed, you might just lose data and some work. But with automated cars, the crash is literal. Two tons of steel and glass, crashing at highway speeds, means that people are likely to be hurt or killed. Even if the user had consented to this testing, other drivers and pedestrians around the robot car haven’t.”

On September 20, 2016, the National Highway Traffic Safety Administration (NHTSA) released the Federal Automated Vehicles Policy, which was meant to provide guidelines for automated vehicles. Instead of offering safety recommendations and regulations, however, the policy simply provides “a framework upon which future Agency action will occur.” As Kelley explains, “at least for the time being, the government is depending on corporate volunteerism rather than regulation to make AVs adequately safe.”

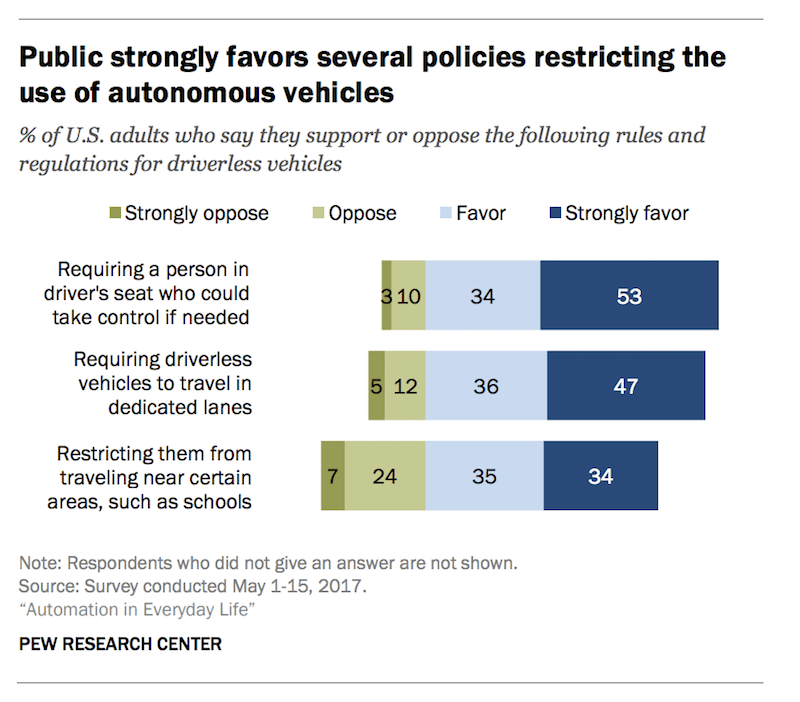

As an October 2017 Pew Research Center poll shows, consumers are hesitant about the widespread adoption of AV technology. The graph above indicates that people would likely feel more comfortable with regulations that the NHTSA has so far avoided outlining.

Kelley argues that current policies do not support the gathering of evidence needed for the scale-up the auto industry wants. In his view, the NHTSA is currently ill-equipped to roll out safe and effective changes to the automobile industry. With a lack of funding and “undue industry influence in its decision making,” the agency’s ability to set standards in the consumer’s interest is limited, which is leading to an uncontrolled “rush to the market.”

Feature image: Thomas Meier, amz_driverless, Acceleration without driver, used under CC BY-NC 2.0. Graph from Automation in Everyday Life, Pew Research Center, p. 36.